Challenge Program

Date

Oct 29, 2025

Location

Hayat, Dublin Royal Convention Centre

Schedule (tentative)

All times are in Dublin local time (GMT, UTC+0).

| Time | Speaker | Title |

|---|---|---|

| 09:00 - 09:10 | Organizers | Opening and AV-Deepfake1M++ Dataset Description |

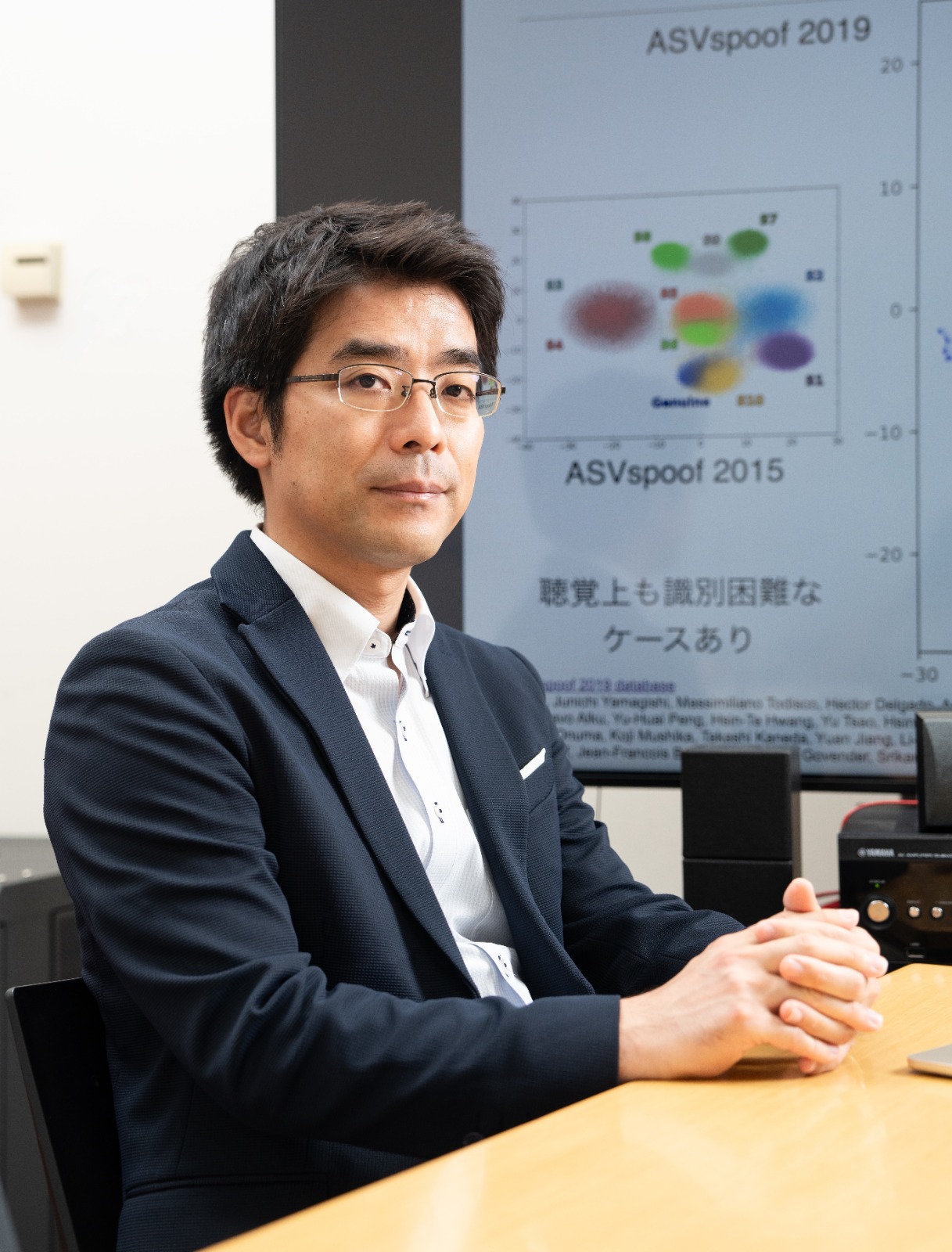

| 09:10 - 09:40 | Junichi Yamagishi | Keynote: Junichi Yamagishi |

| 09:40 - 09:55 | Pindrop | Paper 1: Pindrop it! Audio and Visual Deepfake Countermeasures for Robust Detection and Fine Grained-Localization |

| 09:55 - 10:10 | XJTU SunFlower Lab | Paper 2: HOLA: Enhancing Audio-visual Deepfake Detection via Hierarchical Contextual Aggregations and Efficient Pre-training |

| 10:10 - 10:25 | KLASS | Paper 3: KLASSify to Verify: Audio-Visual Deepfake Detection Using SSL-based Audio and Handcrafted Visual Features |

| 10:25 - 10:30 | Shreya Ghosh | Challenge Results and Future Work |

Speakers

Junichi Yamagishi

National Institute of Informatics

Bio: Junichi Yamagishi received a Ph.D. from the Tokyo Institute of Technology in 2006 for a thesis that pioneered speaker-adaptive speech synthesis. He is currently a Professor at the National Institute of Informatics in Tokyo, Japan. From 2011 to 2016, he held an EPSRC Career Acceleration Fellowship at the Centre for Speech Technology Research (CSTR), University of Edinburgh, U.K. Over the course of his career, he has authored or co-authored more than 400 peer-reviewed papers in international journals and conferences. His work includes MesoNet, the first study on deepfake facial video detection, and ASVspoof, a widely used benchmark database for training and evaluating deepfake audio detection models.

Dr. Yamagishi has held numerous leadership and editorial roles. He served as an elected member of the IEEE Speech and Language Technical Committee (2013–2019), Associate Editor for the IEEE/ACM Transactions on Audio, Speech, and Language Processing (2014–2017), Chair of ISCA SynSIG (2017–2021), and Senior Associate Editor for the same IEEE/ACM journal (2019–2023). He was also a member of the APSIPA Technical Committee on Multimedia Security and Forensics (2018–2023), and has been serving on the IEEE Signal Processing Society Education Board since 2021.

His contributions have been recognized with numerous prestigious awards: the Itakura Prize from the Acoustical Society of Japan, the Kiyasu Special Industrial Achievement Award from the Information Processing Society of Japan, the Young Scientists’ Prize from the Minister of Education, Culture, Sports, Science and Technology, the JSPS Prize from the Japan Society for the Promotion of Science, the Best Paper Award at the IEEE International Workshop on Information Forensics and Security (WIFS), the Docomo Mobile Science Award, the IEEE Biometrics Council’s BTAS/IJCB 5-Year Highest Impact Award, and the Award for Science and Technology (Research Category) from the Minister of Education, Culture, Sports, Science and Technology. He received these awards in 2010, 2013, 2014, 2016, 2017, 2018, 2023, and 2025, respectively.

Presentation Instructions

Please prepare a presentation for your accepted paper.

In every conference session, the organizers will provide a MacBook laptop running Keynote, Preview, and Microsoft PowerPoint, along with adapters, USB sticks (USB-C and USB 3.2), and laser pointers. Please use this laptop; do not use your own. For each session to run smoothly, all speakers must upload their presentations to the podium laptop BEFORE the session begins. The organizers therefore ask all speakers to arrive at their session at least 15 minutes before the scheduled start time to meet the session chair and upload their presentation.

Please send a copy of your presentation to parul@monash.edu, zhixi.cai@monash.edu and shreya.ghosh@uq.edu.au. You will also need to bring your PowerPoint presentation to the conference on a USB stick. If your presentation includes video files, please save them separately on the same USB stick.

More details: https://acmmm2025.org/information-for-presenters/